Qtest Private Slots

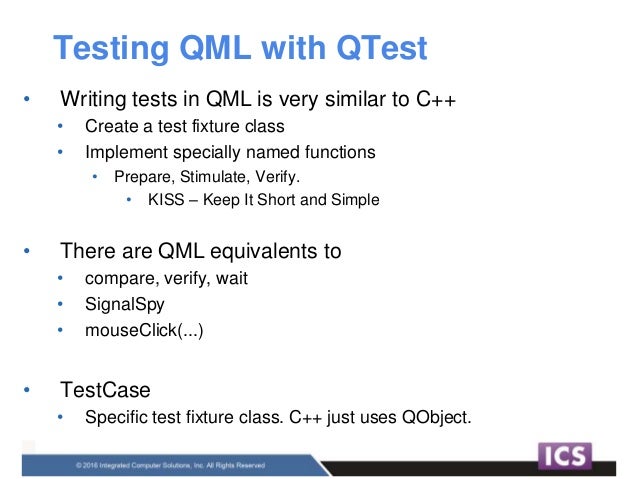

I have found a more convenient way to do this. First, all private methods should be private slots. Then create an instance of the class: Foo a; QMetaObject::invokeMethod can then call any slot that the object has, public or private, so a method named Test can be invoked by name.

To write a C++ unit test with Qt, you need to create a class that inherits from QObject and implements at least one private slot. Each private slot is an independent test, so the simplest unit test class is a QObject subclass with a single private slot.

In the first post of this testing series I mentioned multiple targets as a starting point. Now it’s time to add a class to demonstrate testing. In the main target, let’s have a class called Calculations. The goal is to test the methods of this Calculations class.

The project source code is available from the repository.

Main_Target now has a class for us to test, so it’s time for a testing class. Let’s call it TestMathCalculations. It is defined in Test_Target, must be derived from QObject, and its test methods are private slots. In this post I’ll stick to the QCOMPARE macro to check output values. For the other macros, see Qt’s official documentation.

I also gave some of the QCOMPARE macros wrong expected results, to show what happens when a test produces an unexpected result.

Now there is an issue: when the Calculations class header is included in the secondary (Test_Target) project, that project cannot access it. So the class must be packaged and exported. In this chapter’s branch I’ve also split the source app into two sub-targets. My goal was to separate main.cpp from the classes.

The trick is actually at the bottom. It exports the project as a library with the alias sub::mkmath. This lets us link it to Test_Target so that the Calculations class header can be included there.

Now we have a class and another class to test it. With this build pattern, CMake now calls the tests from Test_Target’s main. I’m showing the most primitive way of testing. It’s not how you would do it in production, and it’s not even close to object-oriented logic and flow. It’s a tutorial. Do not apply it like this in your projects.

For QTest::qExec I’m quoting Qt’s documentation, because it’s so clear: “Executes tests declared in testObject. In addition, the private slots initTestCase(), cleanupTestCase(), init() and cleanup() are executed if they exist.”

int QTest::qExec(QObject *testObject, int argc = 0, char **argv = nullptr)

Remember that the testing class was derived from QObject: qExec needs a QObject pointer and, if you supply them, the command-line arguments. I use its return value as the program’s exit status, so if any test fails I get a non-zero error code.

Qt Test is a framework for unit testing Qt based applications and libraries. Qt Test provides all the functionality commonly found in unit testing frameworks as well as extensions for testing graphical user interfaces.

Qt Test is designed to ease the writing of unit tests for Qt based applications and libraries:

| Feature | Details |
|---|---|
| Lightweight | Qt Test consists of about 6000 lines of code and 60 exported symbols. |
| Self-contained | Qt Test requires only a few symbols from the Qt Core module for non-gui testing. |
| Rapid testing | Qt Test needs no special test-runners; no special registration for tests. |
| Data-driven testing | A test can be executed multiple times with different test data. |
| Basic GUI testing | Qt Test offers functionality for mouse and keyboard simulation. |
| Benchmarking | Qt Test supports benchmarking and provides several measurement back-ends. |
| IDE friendly | Qt Test outputs messages that can be interpreted by Visual Studio and KDevelop. |
| Thread-safety | The error reporting is thread safe and atomic. |
| Type-safety | Extensive use of templates prevents errors introduced by implicit type casting. |
| Easily extendable | Custom types can easily be added to the test data and test output. |

Creating a Test

To create a test, subclass QObject and add one or more private slots to it. Each private slot is a test function in your test. QTest::qExec() can be used to execute all test functions in the test object.

In addition, there are four private slots that are not treated as test functions. They will be executed by the testing framework and can be used to initialize and clean up either the entire test or the current test function.

- initTestCase() will be called before the first test function is executed.

- cleanupTestCase() will be called after the last test function was executed.

- init() will be called before each test function is executed.

- cleanup() will be called after every test function.

If initTestCase() fails, no test function will be executed. If init() fails, the following test function will not be executed; the test will proceed to the next test function.

Example:

For more examples, refer to the Qt Test Tutorial.

Building a Test

If you are using qmake as your build tool, just add the following line to your project file: QT += testlib

If you would like to run the test via make check, add the additional line: CONFIG += testcase

See the qmake manual for more information about make check.

If you are using other build tools, make sure that you add the location of the Qt Test header files to your include path (usually include/QtTest under your Qt installation directory). If you are using a release build of Qt, link your test to the QtTest library. For debug builds, use QtTest_debug.

See Writing a Unit Test for a step by step explanation.

Qt Test Command Line Arguments

Syntax

The syntax to execute an autotest takes the following simple form: testname [options] [testfunctions[:testdata]]...

Substitute testname with the name of your executable. testfunctions can contain names of test functions to be executed. If no testfunctions are passed, all tests are run. If you append the name of an entry in testdata, the test function will be run only with that test data.

For example:

Runs the test function called toUpper with all available test data.

Runs the toUpper test function with all available test data, and the toInt test function with the test data called zero (if the specified test data doesn't exist, the associated test will fail).

Runs the testMyWidget function test, outputs every signal emission and waits 500 milliseconds after each simulated mouse/keyboard event.

Options

Logging Options

The following command line options determine how test results are reported:

-o filename,format

Writes output to the specified file, in the specified format (one of txt, xml, lightxml or xunitxml). The special filename - may be used to log to standard output.

-o filename

Writes output to the specified file.

-txt

Outputs results in plain text.

-xml

Outputs results as an XML document.

-lightxml

Outputs results as a stream of XML tags.

-xunitxml

Outputs results as an Xunit XML document.

-csv

Outputs results as comma-separated values (CSV). This mode is only suitable for benchmarks, since it suppresses normal pass/fail messages.

-teamcity

Outputs results in TeamCity format.

The first version of the -o option may be repeated in order to log test results in multiple formats, but no more than one instance of this option can log test results to standard output.

If the first version of the -o option is used, neither the second version of the -o option nor the -txt, -xml, -lightxml, -teamcity or -xunitxml options should be used.

If neither version of the -o option is used, test results will be logged to standard output. If no format option is used, test results will be logged in plain text.

Test Log Detail Options

The following command line options control how much detail is reported in test logs:

-silent

Silent output; only shows fatal errors, test failures and minimal status messages.

-v1

Verbose output; shows when each test function is entered. (This option only affects plain text output.)

-v2

Extended verbose output; shows each QCOMPARE() and QVERIFY(). (This option affects all output formats and implies -v1 for plain text output.)

-vs

Shows all signals that get emitted and the slot invocations resulting from those signals. (This option affects all output formats.)

Testing Options

The following command-line options influence how tests are run:

-functions

Outputs all test functions available in the test, then quits.

-datatags

Outputs all data tags available in the test. A global data tag is preceded by ' __global__ '.

-eventdelay ms

If no delay is specified for keyboard or mouse simulation (QTest::keyClick(), QTest::mouseClick() etc.), the value from this parameter (in milliseconds) is substituted.

-keydelay ms

Like -eventdelay, but only influences keyboard simulation and not mouse simulation.

-mousedelay ms

Like -eventdelay, but only influences mouse simulation and not keyboard simulation.

-maxwarnings number

Sets the maximum number of warnings to output. 0 for unlimited, defaults to 2000.

-nocrashhandler

Disables the crash handler on Unix platforms. On Windows, it re-enables the Windows Error Reporting dialog, which is turned off by default. This is useful for debugging crashes.

-platform name

This command line argument applies to all Qt applications, but might be especially useful in the context of auto-testing. By using the 'offscreen' platform plugin (-platform offscreen) it's possible to have tests that use QWidget or QWindow run without showing anything on the screen. Currently the offscreen platform plugin is only fully supported on X11.

Benchmarking Options

The following command line options control benchmark testing:

-callgrind

Uses Callgrind to time benchmarks (Linux only).

-tickcounter

Uses CPU tick counters to time benchmarks.

-eventcounter

Counts events received during benchmarks.

-minimumvalue n

Sets the minimum acceptable measurement value.

-minimumtotal n

Sets the minimum acceptable total for repeated executions of a test function.

-iterations n

Sets the number of accumulation iterations.

-median n

Sets the number of median iterations.

-vb

Outputs verbose benchmarking information.

Miscellaneous Options

-help

Outputs the possible command line arguments and gives some useful help.

Creating a Benchmark

To create a benchmark, follow the instructions for creating a test and then add a QBENCHMARK macro to the test function that you want to benchmark.

The code inside the QBENCHMARK macro will be measured, and possibly also repeated several times in order to get an accurate measurement. This depends on the selected measurement back-end. Several back-ends are available. They can be selected on the command line:

| Name | Command-line Argument | Availability |
|---|---|---|
| Walltime | (default) | All platforms |
| CPU tick counter | -tickcounter | Windows, macOS, Linux, many UNIX-like systems. |
| Event Counter | -eventcounter | All platforms |
| Valgrind Callgrind | -callgrind | Linux (if installed) |
| Linux Perf | -perf | Linux |

In short, walltime is always available but requires many repetitions to get a useful result. Tick counters are usually available and can provide results with fewer repetitions, but can be susceptible to CPU frequency scaling issues. Valgrind provides exact results, but does not take I/O waits into account, and is only available on a limited number of platforms. Event counting is available on all platforms and it provides the number of events that were received by the event loop before they are sent to their corresponding targets (this might include non-Qt events).

The Linux Performance Monitoring solution is available only on Linux and provides many different counters, which can be selected by passing an additional option -perfcounter countername, such as -perfcounter cache-misses, -perfcounter branch-misses, or -perfcounter l1d-load-misses. The default counter is cpu-cycles. The full list of counters can be obtained by running any benchmark executable with the option -perfcounterlist.

Notes:

- Using the performance counter may require enabling access to non-privileged applications.

- Devices that do not support high-resolution timers default to one-millisecond granularity.

See Writing a Benchmark in the Qt Test Tutorial for more benchmarking examples.